Writer and organizer: Women in AI Benelux

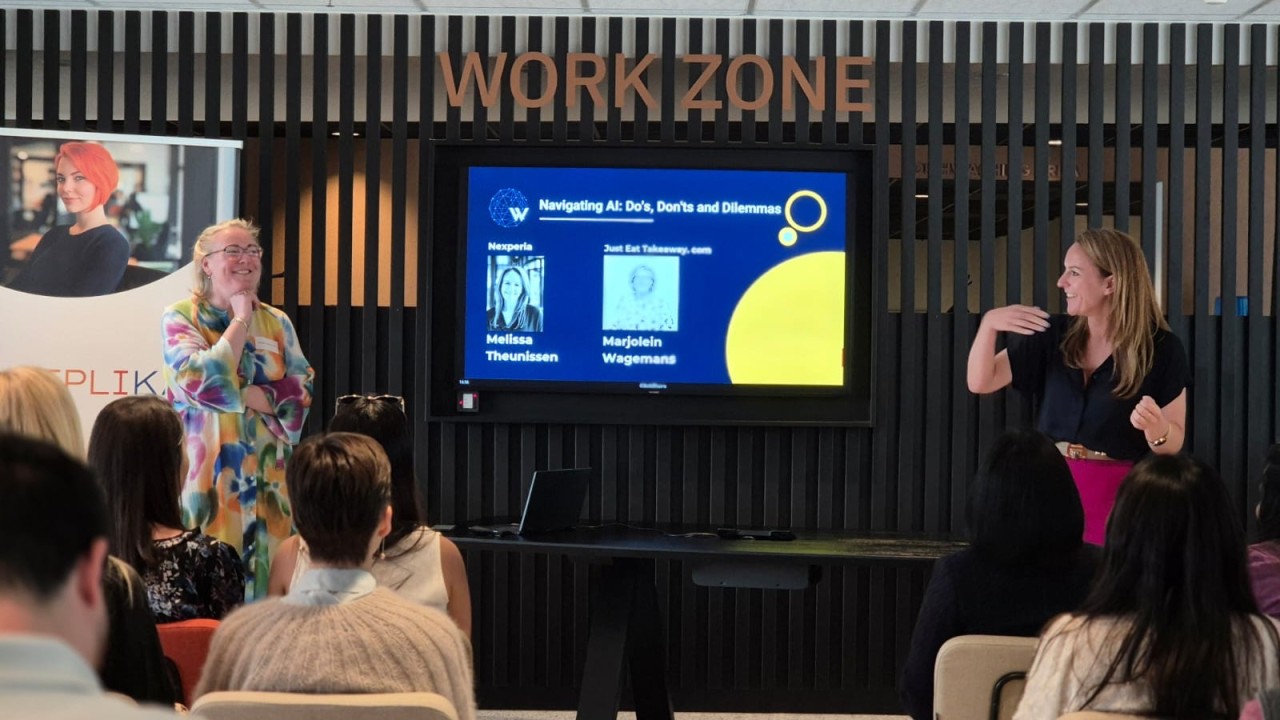

Who we heard from:

Melissa Theunissen, Director Legal at Nexperia, and Marjolein Wagemans, Director Global Data Protection & Digital Confidence at Just Eat Takeaway.com, shared how the EU AI Act is already influencing product development and legal strategy, and unpacked the ethical dilemmas and blind spots emerging in AI systems.

What we explored: AI decisions and the risk lens

From traffic routing to employee recruitment, one key question surfaced: Where should AI stop — and human judgment begin?

The EU AI Act is the world’s first legislative framework to classify AI by risk level — from minimal to unacceptable. That means:

- High-risk systems (like border control, hiring tools, and law enforcement) face stricter regulations.

- All players — including providers, deployers, developers, distributors, and importers — can be held accountable, especially if they alter or rebrand AI systems.

- Transparency is essential: people must be informed when they’re interacting with AI.

“Accountability is for everyone who develops AI tools — even if you are a small start-up,” said Melissa Theunissen. “It’s for anyone shaping how AI is used.”

Key takeaways: Lessons from legal grey zones

One standout moment? A deep dive into how the AI Act legally defines "emotion."

What counts: Affective states like fear, anger, or joy. Emotion should be understood in a wide sense and not interpreted restrictively.

What doesn’t: Physical distress, like pain or fatigue.

“In sensitive domains like healthcare or security, you need to be aware of this interpretation to avoid AI overlooking or misreading critical signals — creating serious gaps in decision-making,” said Marjolein Wagemans.

We also explored how the GDPR and the AI Act intersect. Both prioritise fairness, transparency, and accountability:

- GDPR = protection of personal data

- AI Act = impact of AI systems on individuals and society

Together, they offer a strong compliance toolkit — but navigating both requires care, context, and clarity.

Take this back to your team:

- Map your AI systems and how they interact with users

- Assess risks from both ethical and operational angles

- Take responsibility: flag unvetted tools or questionable practices (e.g., event recordings that may expose confidential data)

- Build a governance model grounded in your values

- Document your decisions — even the trade-offs
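As a starting point, the checklist above could be turned into a simple inventory. This is a minimal sketch, not a compliance tool: the tier names are a common shorthand for the AI Act's risk levels rather than legal text, and every field name, flag message, and example system (`cv-screening`, `spam-filter`) is a hypothetical placeholder.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskTier(Enum):
    # Shorthand labels for the risk levels, assumed for illustration only.
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4


@dataclass
class AISystemRecord:
    name: str
    role: str                   # hypothetical field, e.g. "provider" or "deployer"
    interacts_with_users: bool
    risk_tier: RiskTier
    vetted: bool = False
    decisions: list[str] = field(default_factory=list)  # documented decisions and trade-offs


def needs_attention(record: AISystemRecord) -> list[str]:
    """Return plain-language flags for a record; an empty list means nothing was flagged."""
    flags = []
    if record.risk_tier is RiskTier.UNACCEPTABLE:
        flags.append("unacceptable risk tier: escalate to legal")
    if record.risk_tier is RiskTier.HIGH and not record.decisions:
        flags.append("high-risk system with no documented decisions")
    if not record.vetted:
        flags.append("tool has not been vetted")
    if record.interacts_with_users and record.risk_tier.value >= RiskTier.LIMITED.value:
        flags.append("check that users are told they are interacting with AI")
    return flags


inventory = [
    AISystemRecord("cv-screening", "deployer", True, RiskTier.HIGH),
    AISystemRecord("spam-filter", "deployer", False, RiskTier.MINIMAL, vetted=True),
]

for rec in inventory:
    print(rec.name, needs_attention(rec))
```

The rules in `needs_attention` are illustrative assumptions. A real governance model would replace them with criteria agreed with your legal and data protection teams.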

One attendee, Shahin Habbah, even shared his own custom GPT, designed to test whether an AI system used for recruitment meets AI compliance standards. If you're curious, the link is in the comments.

The big lesson?

The conversations we have today will shape how AI fits into our workplaces, hospitals, borders, and social lives tomorrow. From candidate screening to clinical care, compliance isn’t a tick-box exercise — it’s a human decision.

Ever wonder what goes into making the tiny chips powering humanoid robots?

We also heard from Khaoula Mahzouli, Front-end/Back-end Interaction Specialist at Nexperia, who walked us through the chip-making process and how it’s evolving to meet AI demands more sustainably.

At Nexperia, chip production involves more than 400 steps, transforming silicon wafers into finished chips over a four-month period — shrinking features from 1 micron to 50 nanometers.

Why does this matter? By 2030, global chip demand is expected to reach one trillion units. For context: a single humanoid robot uses over 20,000 chips.
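To put those two figures side by side, here is a rough back-of-the-envelope calculation. It assumes, purely for illustration, that the entire one trillion chips went into humanoid robots, and it treats "over 20,000" as exactly 20,000, so the result is an upper bound rather than a forecast.

```python
TOTAL_CHIP_DEMAND_2030 = 1_000_000_000_000  # "one trillion units", as quoted in the talk
CHIPS_PER_ROBOT = 20_000                    # "over 20,000", treated here as a lower bound


def max_robots(total_chips: int, chips_per_robot: int) -> int:
    """Upper bound on robots that could be built if every chip went into one."""
    return total_chips // chips_per_robot


print(f"{max_robots(TOTAL_CHIP_DEMAND_2030, CHIPS_PER_ROBOT):,}")  # prints 50,000,000
```

In other words, even the full projected demand would cover at most about 50 million such robots, which gives a sense of how chip-hungry these machines are.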

And these chips are getting more sustainable:

- Laser-based stealth dicing increases functional chip yield by 20%, using internal laser cuts to reduce breakage and save wafer space

- Precious metal recycling is nearing 100%, recovering gold and copper from production waste

- Water-saving processes reuse and recycle manufacturing water, saving enough energy to power 210 Dutch homes and cutting CO₂ emissions equivalent to those of 150 cars

This is how hardware drives impact — from robotics to smarter systems.